By: Brian A. Hemstreet, PharmD, FCCP, BCPS

http://www.ucdenver.edu/academics/colleges/pharmacy/Departments/ClinicalPharmacy/DOCPFaculty/H-P/Pages/Brian-Hemstreet,-PharmD.aspx

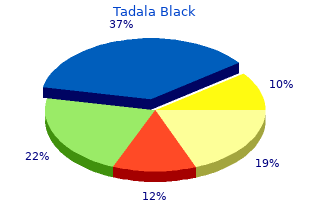

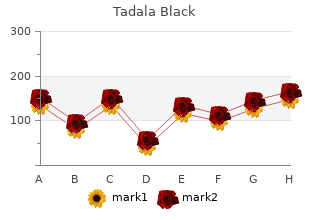

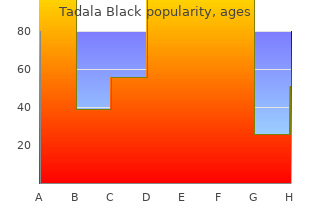

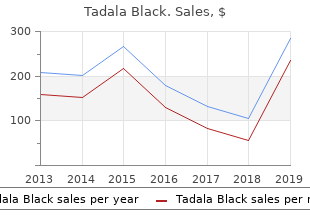

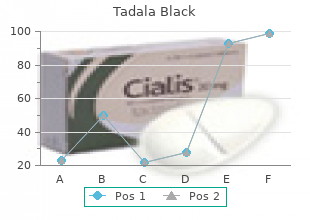

However, the Geomagic software program (used for 3D image processing) was not designed for craniofacial measurements and presented significant difficulties. Measuring the Euclidean distance between landmarks, rather than the lateral distance, required specific assistance from the manufacturer, and this support was only partial and time consuming. In addition, each of the 32 measurements was performed and recorded individually, which made the landmarking process tedious, requiring approximately 20 minutes per image. Comparison of the measurements showed that lateral and surface measurements performed on the 3D digital images were noticeably different. The 3D surface distances were longer than the lateral distances, with the latter more similar to the 2D measurements. The results of the comparison between 2D and 3D measurements are summarised in Tables 14 and 15 and Figures 42 and 43. Since there was a pronounced difference, and given that 3D surface measurements represent the most adequate information on facial features, it was concluded that all the measurements should be calculated using Euclidean coordinates of the craniofacial landmarks (representing the actual surface distance), which was performed using a Microsoft Excel spreadsheet. The 3D surface measurements were subsequently used as phenotypes for the genetic association study, as detailed in Chapter 5.

Results of the comparison between craniofacial measurements in 2D and 3D images, including lateral and surface distance.
[Table 14: volunteers 1-5. Columns for each volunteer: 3D lateral, 3D surface, 2D picture. Rows: measurements, beginning with n-gn 123.]

Graphical representation of the comparison between 2D and 3D measurements in individuals 1-5, based on Table 14.

[Table 15: volunteers 6-10. Columns for each volunteer: 3D lateral, 3D surface, 2D picture. Rows: measurements, beginning with n-gn 117.]

Graphical representation of the comparison between 2D and 3D measurements in individuals 6-10, based on Table 15.

Introduction

Reproducibility of craniofacial measurements can be defined as the ability to obtain the same result, with the same (or a different) examiner, over a period of time (usually days to months). This concept represents one of the fundamental principles of anthropometry and should be investigated thoroughly prior to conducting a final study. An even higher degree of complexity exists when this process is performed on a digital 3D image. Palpation is particularly required for measurement of the landmarks located on or around bony prominences, which are more reproducible, such as left and right zygion (zy) and gonion (go). The inability to locate specific landmarks accurately could introduce an error in subsequent measurements. The landmarks that may introduce an error in measurements include the following: trichion (tr), left and right exocanthion (ex) and endocanthion (en), labiale inferius (li), labiale superius (ls), left and right cheilion (ch) and stomion (sto). The current study tested the reproducibility of craniofacial landmark allocation on a small subset of individuals by calculating derived distances. The results of this study were used as a proof of concept and provided a basis for collection of a larger dataset.
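The derived distances used throughout this study are computed from the Euclidean coordinates of the landmarks. The thesis performed this step in a Microsoft Excel spreadsheet; the following Python sketch only illustrates the same arithmetic. The coordinates, the `LANDMARKS` table, and the function names are hypothetical and are not study data. The ratio follows the "distance * 100 / distance" form used for the facial indices reported in the results.

```python
import math

# Hypothetical landmark coordinates (x, y, z) in millimetres; not study data.
LANDMARKS = {
    "tr": (0.0, 110.0, 5.0),
    "n": (0.0, 45.0, 12.0),
    "gn": (0.0, -70.0, 5.0),
    "go_r": (-50.0, -40.0, -60.0),
    "go_l": (50.0, -40.0, -60.0),
}


def distance(a, b, coords=LANDMARKS):
    """Straight-line (Euclidean) distance between two named landmarks."""
    return math.dist(coords[a], coords[b])


def index(numerator, denominator, coords=LANDMARKS):
    """Ratio of two landmark distances, scaled by 100."""
    return distance(*numerator, coords) * 100 / distance(*denominator, coords)


print(f"n-gn: {distance('n', 'gn'):.1f} mm")
print(f"forehead height ratio: {index(('tr', 'n'), ('go_r', 'go_l')):.1f}")
```

Keeping the coordinates in one table and deriving every distance from it means a corrected landmark position updates all dependent measurements at once, which is the same benefit the spreadsheet formulae provided.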
Materials and Methods

In order to validate the reproducibility of the facial measurements, 13 3D images were analysed twice for a full set of 32 facial landmarks, as detailed in Chapter 2. All facial landmarks were allocated manually, following the same strict methodology. The Euclidean coordinates for the 32 landmarks were exported into Microsoft Excel, and 86 distances and ratios were calculated automatically using the formulae for linear and angular distances, as detailed in Chapter 2.

Results and Discussion

The aim of this study was to evaluate the reproducibility and reliability of 86 facial measurements obtained from 3D facial images. In digital images, the bony structures lying underneath the soft tissue are neither visible nor available for palpation. As a result, measurements requiring location of bone-associated landmarks (such as gonion, zygion and glabella) may be less reproducible on 3D laser-captured images. An a priori assumption was that measurements using landmarks located on the lip and eye areas, which the scanner captured with relatively low efficiency, would show more variation than measurements involving the nose and ear landmarks (specifically the nasion, pronasale, subnasale and tragion). In general, the data on landmarks in the eye and lip areas were limited because of this low capture efficiency. The nasal area landmarks and tragion were the easiest to find due to their defined anatomical location. Due to the location of the trichion (the hairline in the middle of the forehead) and given the problems with scan capture of the hair, that landmark was also expected to show more variation than others. Figure 44 shows an example of the variation between two observations that generated the minimum difference between most of the first and the second measurements.
In contrast, Figure 45 shows an image that generated the maximum difference between these measurements.

A 3D image, tested twice for location of 32 facial landmarks, that generated minimum variance between most of the "old" and the "new" anthropometric measurements. Red circles indicate pairs of landmarks showing significant difference between two observations.

A 3D image, tested twice for location of 32 facial landmarks, that generated maximum variance between most of the "old" and the "new" anthropometric measurements.

For linear distances, the greatest variance was observed between measurements involving paired landmarks, such as gonion and zygion, with two samples producing most of the variability observed. A possible explanation for this variability is poor image quality and general difficulty in locating these landmarks. The analysis of the average values showed that go(r)-zy(r), zy(r)-gn and tr-zy(r) measurements (5. The relatively high variation in linear distances involving gonion, zygion and trichion can be explained by the difficulty in accurate location of those landmarks. On the other hand, more than 62% of the measurements resulted in approximately 2 mm (or less) difference between the two observations.

A summary of 54 linear measurements for 13 3D images with detailed average, minimum and maximum values.

A summary of 22 ratios between linear distances for 13 3D images with detailed average, minimum and maximum values.

[Table columns: Forehead height ratio (tr-n*100/go(r)-go(l)); Upper face height ratio (n-sn*100/go(r)-go(l)); Lower face height ratio (sn-gn*100/go-go); Mandible index (sto-gn)*100/(go-go). First row: Average 2.]

A summary of 10 angular distances for 13 3D images with detailed average, minimum and maximum values.

[Table columns: Nasal tip angle (n-prn-sn); Nasal vertical prominence (tr-prn-gn); Transverse nasal prominence (zy(l)-prn-zy(r)). First row: Average 2.]
The analyses of the results involved only basic descriptive statistics, as a more comprehensive analysis was beyond the scope of this project. The comparison between the craniofacial ratios revealed that the forehead/lower face height index (Tr-g*100/sn-gn) demonstrated the greatest variance (8. This is most likely due to variation in location of the trichion, which may be covered by hair. Evaluation of the reproducibility of the angular distances revealed that the nasolabial angle (prn-sn-ls) showed the greatest variability of 3. One sample showed considerably higher variance due to poor digital capture of the lip area, which affected the accurate location of the labiale superius (ls). The generally low variance in angular distances can be explained by the nature of these measurements. In 3D space, the angular distance is mostly affected by the z-axis (the depth), which is usually unaffected during the landmark location process. However, variance in landmark location on the x- and y-axes can also affect the angular distance. Table 19 shows an example of artificial manipulation of the x, y and z coordinates of the prn landmark. Notably, subtraction in both the y and z coordinates had a more pronounced effect than addition, while for the x coordinate it was the opposite. The landmarks that were most affected by manipulation of the coordinates are highlighted in yellow.

Conclusion

It is important to demonstrate reproducibility of facial measurements taken by different analysts or by the same examiner at different times.
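The sensitivity of an angular measurement to a shift in one landmark, as explored in Table 19, can be sketched as follows. This is a minimal illustration only: it assumes the angle is measured at the middle landmark using the dot product, and the coordinates, the 1 mm shift, and the function names `angle_at` and `shift` are hypothetical rather than taken from the study.

```python
import math


def angle_at(a, vertex, b):
    """Angle in degrees at `vertex` between the rays towards a and b."""
    u = [ai - vi for ai, vi in zip(a, vertex)]
    v = [bi - vi for bi, vi in zip(b, vertex)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def shift(point, axis, delta):
    """Return a copy of `point` moved by `delta` along one axis (0=x, 1=y, 2=z)."""
    moved = list(point)
    moved[axis] += delta
    return tuple(moved)


# Hypothetical coordinates in millimetres for the nasal tip angle (n-prn-sn).
n = (0.0, 40.0, 10.0)
prn = (0.0, 0.0, 30.0)
sn = (0.0, -12.0, 15.0)

baseline = angle_at(n, prn, sn)
for axis, name in enumerate("xyz"):
    for delta in (-1.0, 1.0):
        change = angle_at(n, shift(prn, axis, delta), sn) - baseline
        print(f"prn {name} {delta:+.0f} mm: angle changes by {change:+.2f} degrees")
```

Running the loop for each landmark in turn gives the kind of comparison described above, showing which coordinate and which direction of shift moves the angle most.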

Chapter 33: Molecular Mechanism of the Medium Chain Triglyceride (Ketogenic) Diet

It now provides a key therapeutic approach for the treatment of children with drug-resistant epilepsy (Levy et al. The diet involves a stringent reduction in carbohydrate intake, with an increased intake of medium chain fatty [...] ing around 306 µM; and decanoic acid to 87-552 µM with a mean of 157 µM (Dean et al. [...] ent in brain at 60%-80% of serum levels (Wlaz et al. [...] of gastrointestinal-related side effects, such as cramps, bloating, diarrhea, and vomiting (Liu, [...] Use of the diet has also been limited by poor tolerability, especially in adults, resulting [...]

[...] it was thought to act through the generation of ketones (Bough and Rho, 2007; Rho and Stafstrom, [...] poorly correlates with anticonvulsant efficacy, and this ketone-based mechanism has not been widely supported in animal model studies (Likhodii et al. Recently, however, an unbiased screen for medium [...] In this study, a simple non-animal model, in which valproate has been shown to reg [...] In this study, over 60 compounds were screened for an inhibitory effect on rapid phosphoinositide turnover. This inhibitory effect has since been confirmed as a therapeutic mechanism for valproate in in vitro and in vivo animal seizure models (Chang et al. These potent compounds included decanoic acid, nonanoic acid, and the branched 8-carbon [...] These compounds were further tested in a well-established ex vivo mammalian [...]

[...] noic acid completely blocked seizure activity 35 minutes post addition. [...] loctanoic acid) and some showing no activity (3,7-dimethyloctanoic acid).
[...] model for drug-resistant epilepsy, the low mag [...] Here, brain slices were kept "alive" in a bath perfused with oxygenated arti [...] at 1 mM, a concentration significantly greater than that of medium chain fatty acids found [...] The widely used, established epilepsy treatment, valproate, showed weak activity in this seizure model. This study also showed that medium chain fatty acids [...] This therapeutic effect is also unlikely to [...] idly reduced seizure activity within 10 minutes of treatment. One further study has been reported, investigating the breadth of chemical space for medium [...] acid-related compounds, including a systematic evaluation of methyloctanoic acid derivatives. [...] a clear structure-activity relationship was seen in seizure control, provided by the position of branching on the octanoic acid backbone. The molecular mechanism of decanoic acid in direct seizure control has recently been established (Chang et al. In this study, decanoic acid (1 mM) was shown to abolish seizure [...]

These results confirmed earlier studies (Chang et al. [...] chemical space showing potential efficacy in seizure control. These experiments therefore suggested a direct role for medium chain fatty acids in seizure control. [...] most potent compounds branched around the fifth carbon. [...] the fourth to the seventh carbon (Chang et al. Based on these results, three related compounds were then tested: 4-ethyloctanoic [...] These compounds were further screened in a range of in vivo seizure models (Table 33. In a related study, mice treated with decanoic acid by gastric gavage (30 mmol/ [...] These data provide evidence that medium chain fatty acids, of defined structure, show activity in a range of in [...] First, since during seizure activity, membrane depolarization occurs, increasing excitability, [...] decanoic acid was analyzed at varying membrane potentials.
As previously described, decanoic acid blocks seizure activity within 15 minutes of addi [...] Thus, due to the burgeoning evidence that fatty acids play a major role in the diet's therapeutic effect and despite the [...] A recent study has identified a role for decanoic acid in regulating mitochondrial proliferation (Hughes [...] tically relevant concentrations (250 µM) over a 6 day period in a neuronal cell line and in human fibroblasts. Interestingly, this effect was not shown by octanoic acid. [...] mitochondrial load has been suggested to protect [...] Early studies of medium chain fatty acids show considerable variation in plasma fatty acid levels over a 24-hour period (Sills [...] bohydrate load with the diet is necessary to maintain decanoic acid levels at an effective level, and [...] blood fatty acids rather than just ketone levels. Several considerations are needed here. [...] resistant to metabolic degradation could overcome the stringent dietary regime.

[...] tially providing stronger inhibition during seizure activity. [...] use of Perampanel is limited by neuropsychiatric side effects, specifically aggression (Rugg-Gunn, 2014; Steinhoff et al. [...] maceutical reagents based on the mechanisms of decanoic acid will hopefully not cause this side effect. Using the transmembrane domains of GluA2, a putative decanoic acid-binding region was identified in the M3 helix, thought to be involved in regulating [...] This site is distinct from that reported for Perampanel (Szenasi et al. Over the last 30 years, many studies have suggested a role for ketones in seizure control and neuroprotection (Bough and Rho, 2007; Rho et al. In some forms of epilepsy, ketones may provide an alternative energy source for the brain (Kim et al. Ketones have also been demonstrated to alter [...] utes to seizure-activity and is rescued by valproic [...]

Seizure control by derivatives of medium chain fatty acids associated
with the ketogenic diet show novel branching point structure for enhanced potency. J Pharmacol Exp Ther 352, 43-52.

Developing novel chemical structures that show enhanced binding to the target site on the receptor may reduce the [...]

Medium chain triglycerides: an update.
Pharmacological characterization of the 6 Hz psychomotor seizure model of partial epilepsy.
Histone deacetylase is a target of valproic acid-mediated cellular differentiation.
Seizure control by ketogenic diet-associated medium chain fatty [...]
Role of octanoic and decanoic acids in the control of seizures.
Molecular targets for antiepileptic drug development.
Pharmacogenetics in model systems: Defining a common mecha [...]
Glutamate hyperexcitability and seizure like activity throughout the brain and spinal cord [...]
Valproic acid protects against haemorrhagic shock-induced signalling [...]
[...] ods update: understanding the causes of epileptic [...]
[...] evaluation of brain and plasma for octanoic and dec [...]
[...] medium-chain fatty acids acutely reduce phos [...]
[...] from children with intractable epilepsy treated with medium-chain triglyceride diet.
[...] of 10-carbon fatty acid as modulating ligand of [...]
[...] survey of clinical experiences with perampanel in [...]
[...] receptor antagonists and their neuroprotective effects.
Attenuation of phospholipid signaling provides a novel mechanism for the action of valproic acid.
[...] experimental therapies for status epilepticus in preclinical development.

This is expected to lead to low oxaloacetate levels, which then can reduce the binding of ace [...] In aerobic metabolism, after citrate synthase transfers the acetyl-group onto [...] This is in contrast to long chain fatty acids, which [...]

[...] occurs in cytosol, is the main pathway of metabolic breakdown of glucose into pyruvate. [...] is the main refilling pathway, thought to be taking place in astrocytes (Patel, 1974). [...] imbalances between excitation and inhibition.

Reducing the anchor by a factor of 50 (relative to motor vehicle deaths) reduced respondents' estimates by a factor of 2-5. Having estimates vary by less than a tenth of the variation in anchors indicates the strength of these beliefs. Another sign of robustness was the strong correlation between estimates produced with the two anchors. That ordering was also strongly correlated with one implied by relative frequency judgments for 106 paired causes of death. Thus, despite the novelty of these tasks, subjects revealed consistent beliefs, reasonably predicted by availability and anchoring, however the question was asked. For predicting which causes of death most occupy people, an ordering may be sufficient (Davies, 1996; Fischer et al. For predicting which risks people find acceptable, absolute estimates are needed (as are absolute estimates of the associated benefits). Such predictions require delineating the conditions under which real-world estimates are made. Are risks and benefits viewed in a common framework, reducing the need for absolute estimates? It takes even more delineation to answer the question that prompted this, and much other, research into risk perceptions: Do people know enough to assume a responsible role in managing risks, whether in their lives or in society as a whole? The picture of potential competence that emerged from that research is one of behavioral decision research's more visible impacts. The very act of conducting such research makes a political statement: claims regarding public competence must be disciplined by fact.
Some partisans wish to depict an immutably incompetent public, so as to justify protective regulations or deference to technocratic experts. Other partisans wish to depict an already competent public, able to fend for itself in an unfettered marketplace and participate in technological decisions. The last of these relationships indicates that people can track what they see, even if they do not realize how unrepresentative the observed evidence is. The case for the glass being half-empty rests on (1) the enormous range of the statistical frequencies, making some correlation almost inevitable (could people get through life without knowing that cancer kills more people than botulism?). The last of these weaknesses may be exploited by those (scaremongers or pacifiers) hoping to manipulate the availability of particular risks. These results inform the debate over public competence only to the extent that public responses to risk depend on perceived frequency of death. Subsequent research found, however, that risk has multiple meanings (Slovic, Fischhoff, & Lichtenstein, 1980). When asked to estimate risk (on an open-ended scale with a modulus of 10), lay subjects rank some sources similarly for risk and average year fatalities, but others quite differently. However, a sample of technical experts treated the two definitions of risk as synonymous. An early candidate for such a risk factor was catastrophic potential: other things being equal, people might see greater risk in hazards that can claim many lives at once, as seen perhaps in special concern about plane crashes, nuclear power, and the like. Some experts even proposed raising the number of deaths in each possible accident sequence to an exponent (>1) when calculating expected fatalities (Fischhoff, 1984). That idea faded when they realized that, with large accidents, the exponent swamps the rest of the analysis.
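A small numerical sketch shows why the exponent swamps the rest of the analysis. The accident scenarios, probabilities, and function names below are hypothetical and chosen only for illustration; the weighting rule (probability times deaths raised to an exponent) is one plausible reading of the proposal described above, not a formula taken from the cited work.

```python
def weighted_fatalities(scenarios, exponent=1.0):
    """Sum of probability * deaths**exponent over accident scenarios."""
    return sum(p * deaths ** exponent for p, deaths in scenarios)


def largest_accident_share(scenarios, exponent=1.0):
    """Fraction of the weighted total contributed by the deadliest scenario."""
    p, deaths = max(scenarios, key=lambda s: s[1])
    return p * deaths ** exponent / weighted_fatalities(scenarios, exponent)


# Hypothetical (annual probability, deaths) pairs for three accident sequences.
SCENARIOS = [(1e-1, 1), (1e-3, 100), (1e-6, 10_000)]

for k in (1.0, 1.5, 2.0):
    total = weighted_fatalities(SCENARIOS, k)
    share = largest_accident_share(SCENARIOS, k)
    print(f"exponent {k}: weighted total {total:.2f}, rarest accident share {share:.0%}")
```

With an exponent of 1 the rarest, largest accident contributes only a small part of the expected fatalities in this example; with an exponent of 2 it accounts for most of the total, so the result is driven almost entirely by the least certain scenario.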
Moreover, the empirical research suggested that catastrophic potential per se was not a driving factor (Slovic, Lichtenstein, & Fischhoff, 1984). If so, then uncertain risk management might have scared audiences more than potential body counts in movies like The China Syndrome and A Civil Action. The correlation between catastrophic potential and uncertainty first emerged in behavioral research predicting risk preferences. Its impetus was Starr's widely cited (1969) claim that, for a given benefit, the public tolerates greater risk if it is incurred voluntarily. As evidence, he estimated fatality risks and economic benefits from eight sources. In a plot, he sketched two parallel "acceptable risk" lines, an order of magnitude apart, for voluntary and involuntary risks. Although seminal, the paper was technically quite rough, in terms of sources, estimates, and statistics (Fischhoff et al. More fundamentally, it assumed that people's risk and benefit perceptions matched their statistical estimates when determining acceptable trade-offs. A simple way to test it was asking citizens to judge technologies in terms of risk, benefits, voluntariness and other potentially relevant risk factors. A belief that the acceptable level of risk for many (but not all) technologies was lower than current levels (contrary to Starr's hypothesis of a socially determined balance). A significant correlation between judgments of current benefits and acceptable risk levels (indicating a willingness to incur greater risk in return for greater benefit). A large improvement in predicting acceptable risk from perceived benefits, when many risk factors were partialed out, indicating the need for a double standard. This research has helped many institutions to realize that risk entails more than just average year fatalities when setting their risk-management policies.
Predicting public responses to specific technologies requires research like that of the causes-of-death study: looking at how, and how accurately, people perceive the factors determining their judgments of risk (including which heuristics are invoked and where they lead). If biases seem plausible, even predictable, then it behooves researchers to help reduce them. The first step in that process is establishing that bias has, in fact, been demonstrated (the topic of Table 41. Clear normative standards are essential to protecting the public from interventions that promote some belief, under the guise of correcting poor lay judgments. For example, a popular strategy for defending risky activities is comparing them with other, apparently accepted risks. Living next to a nuclear power plant has been compared to eating peanuts, which the carcinogenic fungus, aflatoxin, can contaminate. To paraphrase an old advertisement, the implication is that, "If you like Skippy (Peanut Butter), you'll love nuclear power." People reasonably care about the associated benefits, as well as the societal processes determining risk-benefit tradeoffs. A given risk should be less acceptable if it provides less benefit or is imposed on those bearing it (eroding their rights and respect) (Fischhoff, 1995; Leiss, 1996). When bias can be demonstrated, identifying the responsible heuristic has both applied and normative value. For example, invoking availability means attributing bias to systematic use of systematically unrepresentative evidence. Failing to adjust for biased sampling may reflect insufficient skepticism (taking observations at face value) or insufficient knowledge (about how to adjust). Being ignorant in these ways is quite different from being stupid or hysterical, charges that often follow allegations of bias.
In this light, both citizens and scientists need the same thing: better information, along with better understanding of its limits. Similarly, attributing concern over catastrophic potential to concern over the associated uncertainty shifts attention to whether people have the metacognitive skills for evaluating technical evidence. It reduces the importance of any biases induced by hearing worst-case analysis scenarios. It also implies a more easily defended normative principle: worrying about how well a technology is understood, rather than whether casualties occur at once or are distributed over time. Concern over catastrophic potential means creating incentives for siting technologies remotely and disincentives for investing in technologies that could affect many people at once. Without direct research into what citizens want and believe, policies may be mistakenly created in their name (Fischhoff, 2000a, 2000b). The central role played by expert judgment in public policy has evoked a stream (though not quite a torrent) of concern regarding its quality (National Research Council, 1996). The heuristics-and-bias literature has persuaded some analysts that experts are like lay people when forced to go beyond their data, historical records, and specialized procedures, and rely on judgment.

In each group, half of the subjects were promised that the five most accurate subjects would receive $20 each. Each subject evaluated one of two different lists of causes, constructed such that he or she evaluated either an implicit hypothesis or a coextensional explicit disjunction. Each type had three components, one of which was further divided into seven subcomponents. To avoid very small probabilities, we conditioned these seven subcomponents on the corresponding type of death. To provide subjects with some anchors, we informed them that the probability or frequency of death resulting from respiratory illness is about 7.

Mean Probability and Frequency Estimates for Causes of Death in Study 1: Comparing Evaluations of Explicit Disjunctions with Coextensional Implicit Disjunctions

Note: Actual percentages were taken from the 1990 U.

Specifically, the former equals 58%, whereas the latter equals 22% + 18% + 33% = 73%. This index, called the unpacking factor, can be computed directly from probability judgments, unlike w, which is defined in terms of the support function. Subadditivity is indicated by an unpacking factor greater than 1 and a value of w less than 1. An analysis of medians rather than means revealed a similar pattern, with somewhat smaller differences between packed and unpacked versions. Comparison of probability and frequency tasks showed, as expected, that subjects gave higher and thus more subadditive estimates when judging probabilities than when judging frequencies, F(12, 101) = 2. The judgments generally overestimated the actual values, obtained from the 1990 U.
The only clear exception was heart disease, which had an actual probability of 34% but received a mean judgment of 20%. Because subjects produced higher judgments of probability than of frequency, the former exhibited greater overestimation of the actual values, but the correlation between the estimated and actual values (computed separately for each subject) revealed no difference between the two tasks. The following design provides a more stringent test of support theory and compares it with alternative models of belief. Suppose A1, A2, and B are mutually exclusive and exhaustive; A' = (A1 ∨ A2); A is implicit; and Ā is the negation of A. Consider the following observable values: α = P(A, B); β = P(A', B); γ1 = P(A1, A2 ∨ B), γ2 = P(A2, A1 ∨ B), γ = γ1 + γ2; and δ1 = P(A1, Ā1), δ2 = P(A2, Ā2), δ = δ1 + δ2. Different models of belief imply different orderings of these values: support theory, α ≤ β = γ ≤ δ; Bayesian model, α = β = γ = δ; belief function, α = β ≥ γ = δ; regressive model, α = β ≤ γ = δ. Support theory predicts α ≤ β and γ ≤ δ because of the unpacking of the focal and residual hypotheses, respectively; it also predicts β = γ because of the additivity of explicit disjunctions. The Bayesian model implies α = β and γ = δ, by extensionality, and β = γ, by additivity. Shafer's theory of belief functions also assumes extensionality, but it predicts β ≥ γ because of superadditivity. The above data, as well as numerous studies reviewed later, demonstrate that α < δ, which is consistent with support theory but inconsistent with both the Bayesian model and Shafer's theory. The observation that α < δ could also be explained by a regressive model that assumes that probability judgments satisfy extensionality but are biased toward. However, this model predicts no difference between α and β, each of which consists of a single judgment, or between γ and δ, each of which consists of two. Thus, support theory and the regressive model make different predictions about the source of the difference between α and δ.
To contrast these predictions, we asked different groups (of 25 to 30 subjects each) to assess the probability of various unnatural causes of death. All subjects were told that a person had been randomly selected from the set of people who had died the previous year from an unnatural cause. The hypotheses under study and the corresponding probability judgments are summarized in Table 25. The first row, for example, presents the judged probability that death was caused by an accident or a homicide rather than by some other unnatural cause. In accord with support theory, δ = δ1 + δ2 was significantly greater than γ = γ1 + γ2, p <. Before turning to further demonstrations of unpacking, we discuss some methodological questions regarding the elicitation of probability judgments. It could be argued that asking a subject to evaluate a specific hypothesis conveys a subtle (or not so subtle) suggestion that the hypothesis is quite probable. Subjects, therefore, might treat the fact that the hypothesis has been brought to their attention as information about its probability. Subjects were asked to write down the last digit of their telephone numbers and then to evaluate the percentage of couples having exactly that many children. They were promised that the three most accurate respondents would be awarded $10 each. As predicted, the total percentage attributed to the numbers 0 through 9 (when added across different groups of subjects) greatly exceeded 1. Thus, subadditivity was very much in evidence, even when the selection of the focal hypothesis was hardly informative. Subjects overestimated the percentage of couples in all categories, except for childless couples, and the discrepancy between the estimated and the actual percentages was greatest for the modal couple with 2 children. Furthermore, the sum of the probabilities for 0, 1, 2, and 3 children, each of which exceeded.
In sharp contrast to the subadditivity observed earlier, the estimates for complementary pairs of events were roughly additive, as implied by support theory. The finding of binary complementarity is of special interest because it excludes an alternative explanation of subadditivity according to which the evaluation of evidence is biased in favor of the focal hypothesis.

Subadditivity in Expert Judgments

Is subadditivity confined to novices, or does it also hold for experts? Redelmeier, Koehler, Liberman, and Tversky (1995) explored this question in the context of medical judgments. They presented physicians at Stanford University (N = 59) with a detailed scenario concerning a woman who reported to the emergency room with abdominal pain. Half of the respondents were asked to assign probabilities to two specified diagnoses (gastroenteritis and ectopic pregnancy) and a residual category (none of the above); the other half assigned probabilities to five specified diagnoses (including the two presented in the other condition) and a residual category (none of the above). Subjects were instructed to give probabilities that summed to one because the possibilities under consideration were mutually exclusive and exhaustive. If the physicians' judgments conform to the classical theory, then the probability assigned to the residual category in the two-diagnosis condition should equal the sum of the probabilities assigned to its unpacked components in the five-diagnosis condition. Consistent with the predictions of support theory, however, the judged probability of the residual in the two-diagnosis condition (mean =.

In a second study, physicians from Tel Aviv University (N = 52) were asked to consider several medical scenarios consisting of a one-paragraph statement including the patient's age, gender, medical history, presenting symptoms, and the results of any tests that had been conducted.
One scenario, for example, concerned a 67-year-old man who arrived in the emergency room suffering a heart attack that had begun several hours earlier. Each physician was asked to assess the probability of one of the following four hypotheses: patient dies during this hospital admission (A); patient is discharged alive but dies within 1 year (B); patient lives more than 1 but less than 10 years (C); or patient lives more than 10 years (D). Throughout this chapter, we refer to these as elementary judgments because they pit an elementary hypothesis against its complement, which is an implicit disjunction of all of the remaining elementary hypotheses. After assessing one of these four hypotheses, all respondents assessed P(A, B), P(B, C), and P(C, D) or the complementary set. We refer to these as binary judgments because they involve a comparison of two elementary hypotheses.

The means of the four groups in the preceding example were 14% for A, 26% for B, 55% for C, and 69% for D, all of which overestimated the actual values reported in the medical literature. In problems like this, when individual components of a partition are evaluated against the residual, the denominator of the unpacking factor is taken to be 1; thus, the unpacking factor is simply the total probability assigned to the components (summed over different groups of subjects). In sharp contrast, the binary judgments (produced by two different groups of physicians) exhibited near-perfect additivity, with a mean total of approximately 100%.

Further evidence for subadditivity in expert judgment has been provided by Fox, Rogers, and Tversky (1996), who investigated 32 professional options traders at the Pacific Stock Exchange. These traders made probability judgments regarding the closing price of Microsoft stock on a given future date.
Microsoft stock is traded at the Pacific Stock Exchange, and the traders are commonly concerned with the prediction of its future value.
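As a worked example of the unpacking factor in the heart attack scenario: with the denominator taken to be 1, the factor is simply the sum of the four mean elementary judgments. The sketch below uses the group means reported above (14%, 26%, 55%, and 69%); the function and variable names are illustrative assumptions.

```python
# Mean judged probabilities (in percent) for the four elementary hypotheses,
# each assessed by a different group of physicians.
elementary_means = {"A": 14, "B": 26, "C": 55, "D": 69}


def unpacking_factor(means_percent):
    """Total probability assigned to the components, with the denominator taken as 1."""
    return sum(means_percent.values()) / 100


factor = unpacking_factor(elementary_means)
print(factor)  # 1.64
```

A factor above 1 indicates subadditivity: the four exclusive and exhaustive hypotheses together received 164% of the probability, whereas additive judgments would total 100%.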

